Jan 27, 2026

Why AI-SRE Needs Topology, Not Just Telemetry


Why Telemetry Breaks Down at Scale

AI-SRE has gained significant attention lately. The promise is compelling: use AI to assist with detection and triage so engineers no longer have to wake up at 3 AM. As a result, most observability providers now offer AI-driven capabilities that automate parts of incident response.

However, core SRE tasks such as tracing failures to root causes, predicting what a change will break, and understanding which customers are affected cannot be solved with logs and metrics alone (see Google's SRE Book). They require topology, not just telemetry.

In November 2025, a minor internal change at Cloudflare caused a cascading outage across multiple products. Engineers detected the failures quickly, but tracing them back to the original cause took hours because the dependency chain was unclear.

MELT (Metrics, Events, Logs, Traces) data showed something was wrong; topology would have shown what broke, what else was affected, and what to fix.

Why AI-SRE needs topology for context

Context Engineering is the discipline of feeding LLMs the right data, at the right time, and in the right format. For AI-SRE, this context is critical to reasoning about blast radius, ownership, and customer impact. Here, topology means a continuously updated, queryable graph of infrastructure and application dependencies, enriched with ownership, change history, and business metadata.

An LLM with only logs and metrics can’t answer key questions:

What else depends on this? What's the blast radius?

Which customers are affected?

The same LLM, with a structured graph of dependencies and changes, can:

Traverse the dependency graph to identify affected resources

Correlate deployment timing with error spikes

List customer accounts tied to impacted services

The solution is to model your infrastructure as a graph: resources are nodes, and dependencies are edges. This structure is what makes topology queryable. The question "what's the blast radius?" becomes a standard graph traversal.
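As a minimal sketch (Python, with hypothetical resource names), the graph can be held as a list of (dependent, dependency) edges. The blast radius of a change is everything that transitively depends on the changed resource, which a breadth-first search over the reversed edges finds:

```python
from collections import deque

# Hypothetical edges: (dependent, dependency).
# ("payment-api-pod", "prod-rds-access") means a change to the role can affect the pod.
DEPENDS_ON = [
    ("payment-api-pod", "prod-rds-access"),
    ("payment-service", "payment-api-pod"),
    ("checkout-service", "payment-service"),
    ("search-service", "search-pod"),
]

def build_reverse_index(edges):
    """Map each resource to the resources that directly depend on it."""
    dependents = {}
    for dependent, dependency in edges:
        dependents.setdefault(dependency, []).append(dependent)
    return dependents

def blast_radius(changed, edges):
    """Breadth-first search over reversed edges: everything that transitively depends on `changed`."""
    dependents = build_reverse_index(edges)
    affected, queue = set(), deque([changed])
    while queue:
        node = queue.popleft()
        for parent in dependents.get(node, []):
            if parent not in affected:
                affected.add(parent)
                queue.append(parent)
    return affected

print(sorted(blast_radius("prod-rds-access", DEPENDS_ON)))
# ['checkout-service', 'payment-api-pod', 'payment-service']
```

Walking edges in reverse is the key design choice: edges are recorded in the natural "A depends on B" direction, and impact flows the opposite way.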

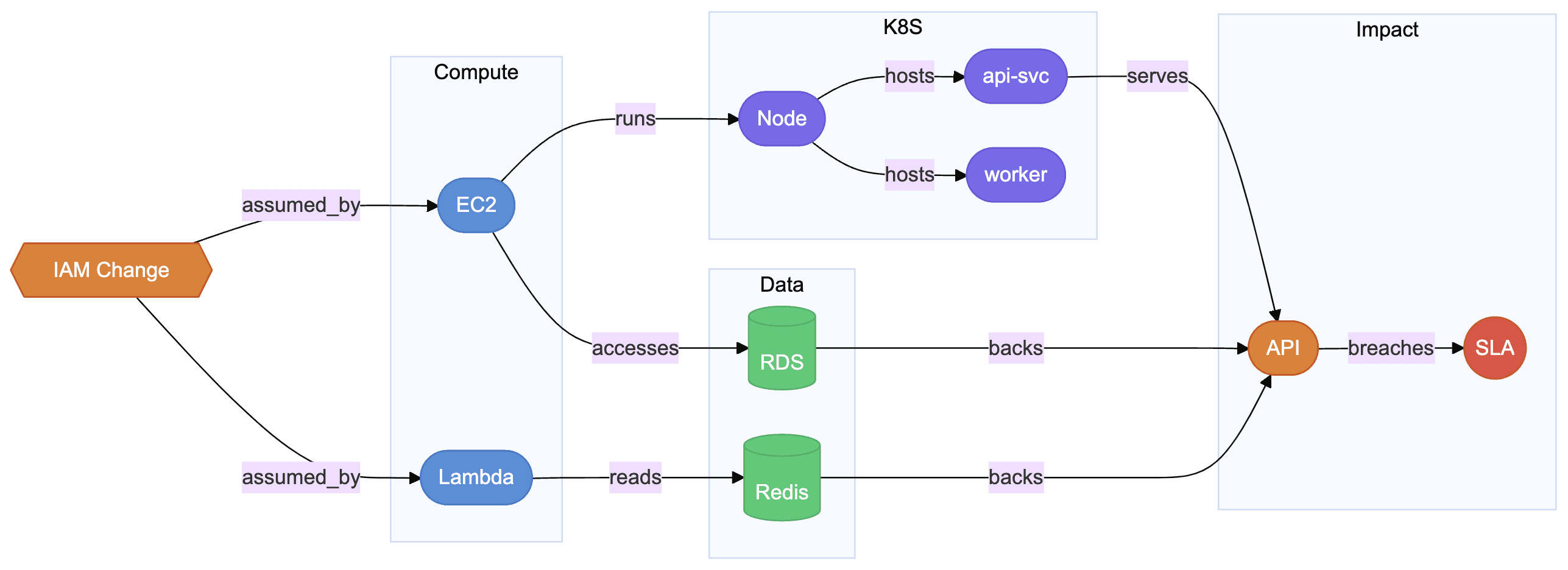

Example: what breaks when an IAM role change affects access?

A change to the prod-rds-access IAM role can cascade from the payment-api pod to the service layer and its 99.99% SLA. (What the LLM still can't do is replace human judgment; it can only reason within the context it's given.)
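Listing affected nodes is only half the answer; responders also need to see why a node is affected. A sketch under the same assumptions: a breadth-first search can record the parent of each node it reaches and rebuild the path from the changed IAM role to the service. The node IDs and the SLA lookup below are illustrative, not a real schema.

```python
from collections import deque

# Hypothetical reverse edges: dependency -> resources that depend on it.
DEPENDENTS = {
    "iam_role/prod-rds-access": ["pod/payment-api"],
    "pod/payment-api": ["service/payment"],
}
# Hypothetical business metadata attached to nodes.
SLA = {"service/payment": "99.99%"}

def impact_path(changed, target):
    """Return the chain of nodes from `changed` to `target`, or None if `target` is unaffected."""
    came_from = {changed: None}  # node -> the node we reached it from
    queue = deque([changed])
    while queue:
        node = queue.popleft()
        if node == target:
            path = []
            while node is not None:  # walk parents back to the changed node
                path.append(node)
                node = came_from[node]
            return list(reversed(path))
        for nxt in DEPENDENTS.get(node, []):
            if nxt not in came_from:
                came_from[nxt] = node
                queue.append(nxt)
    return None

path = impact_path("iam_role/prod-rds-access", "service/payment")
print(" -> ".join(path), "| SLA:", SLA[path[-1]])
# iam_role/prod-rds-access -> pod/payment-api -> service/payment | SLA: 99.99%
```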

How do you build the graph?

Your system is already a web of entities and relationships, so represent it as one.

Nodes (examples):

EC2 instances, IAM roles, security groups

Kubernetes nodes, pods, deployments

services, databases, queues, buckets

Edges (examples):

EC2 ASSUMES IAM Role

Node HOSTS Pod

Service A CALLS Service B

Pod SCHEDULED_ON Node

Subnet PART_OF VPC
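One minimal way to hold typed nodes and edges in memory, assuming Python dataclasses. The kind names and the link between the instance and the role are illustrative; production systems often use a graph database instead, and this sketch only shows the shape of the data:

```python
from dataclasses import dataclass, field

@dataclass(frozen=True)
class Node:
    kind: str  # e.g. "EC2Instance", "IAMRole", "Pod"
    id: str    # stable identifier from the system of record

@dataclass(frozen=True)
class Edge:
    src: Node
    rel: str   # relationship type, e.g. "ASSUMES", "HOSTS"
    dst: Node

@dataclass
class Graph:
    edges: set = field(default_factory=set)

    def add(self, src, rel, dst):
        self.edges.add(Edge(src, rel, dst))

    def out(self, node, rel=None):
        """Nodes reached from `node`, optionally filtered by relationship type."""
        return {e.dst for e in self.edges if e.src == node and (rel is None or e.rel == rel)}

g = Graph()
ec2 = Node("EC2Instance", "i-02abc123")
role = Node("IAMRole", "prod-rds-access")
k8s_node = Node("K8sNode", "ip-10-0-3-42")
pod = Node("Pod", "payment-api-7d4f9")
g.add(ec2, "ASSUMES", role)
g.add(k8s_node, "HOSTS", pod)
g.add(pod, "SCHEDULED_ON", k8s_node)
print(g.out(k8s_node, "HOSTS"))
# {Node(kind='Pod', id='payment-api-7d4f9')}
```

Frozen dataclasses are hashable, so nodes and edges can live in sets and duplicate edges from repeated discovery runs collapse automatically.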

What to include in your infrastructure graph

1. Provisioned data

The static source of truth: resources, dependencies, ownership, environments. This is the "skeleton" of your infrastructure.

Ex: EC2 instance i-02abc123 connected to VPC vpc-07def456 or Kubernetes pod payment-api-7d4f9 running on node ip-10-0-3-42.
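A sketch of turning provisioned data into edges, assuming the dict shape returned by boto3's `ec2.describe_instances()` (`Reservations` → `Instances` → `InstanceId`, `VpcId`, `SubnetId`, `IamInstanceProfile`). The relationship names and the subnet ID are hypothetical. A live collector would page through results with `client.get_paginator("describe_instances")`; here a trimmed sample response keeps the example offline:

```python
def edges_from_describe_instances(response):
    """Turn an EC2 DescribeInstances response dict into (src, rel, dst) edges."""
    edges = []
    for reservation in response.get("Reservations", []):
        for inst in reservation.get("Instances", []):
            iid = f"ec2/{inst['InstanceId']}"
            # VpcId/SubnetId may be absent for some instance states, so check first.
            if "VpcId" in inst:
                edges.append((iid, "IN_VPC", f"vpc/{inst['VpcId']}"))
            if "SubnetId" in inst:
                edges.append((iid, "IN_SUBNET", f"subnet/{inst['SubnetId']}"))
            # EC2 reports the instance profile; resolving its IAM role needs a separate IAM lookup.
            profile = inst.get("IamInstanceProfile")
            if profile:
                edges.append((iid, "HAS_PROFILE", f"instance_profile/{profile['Arn']}"))
    return edges

# Trimmed sample shaped like a DescribeInstances response (subnet ID is hypothetical).
SAMPLE = {
    "Reservations": [
        {"Instances": [{"InstanceId": "i-02abc123", "VpcId": "vpc-07def456", "SubnetId": "subnet-0aa11bb2"}]}
    ]
}

for edge in edges_from_describe_instances(SAMPLE):
    print(edge)
```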

2. Infrastructure as Code

IaC templates and state files that provision infrastructure. These define cloud resources such as VPCs, EC2 instances, IAM roles, and security groups.

Ex: Terraform state shows EC2 instance i-02abc123 was created by resource aws_instance.web_server in module frontend-infra.
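To connect live resources back to the code that created them, one option is the JSON that `terraform show -json` prints for the current state. The sketch below assumes that format (`values.root_module`, nested `child_modules`, each resource carrying its full `address`) and a trimmed, illustrative sample:

```python
import json

# Trimmed, illustrative output of `terraform show -json`.
SHOW_JSON = """
{
  "format_version": "1.0",
  "values": {
    "root_module": {
      "resources": [],
      "child_modules": [
        {
          "address": "module.frontend-infra",
          "resources": [
            {
              "address": "module.frontend-infra.aws_instance.web_server",
              "mode": "managed",
              "type": "aws_instance",
              "name": "web_server",
              "values": {"id": "i-02abc123"}
            }
          ]
        }
      ]
    }
  }
}
"""

def cloud_id_to_address(module):
    """Recursively map each managed resource's cloud ID to its Terraform address."""
    mapping = {}
    for res in module.get("resources", []):
        cloud_id = res.get("values", {}).get("id")
        if res.get("mode") == "managed" and cloud_id:  # skip data sources
            mapping[cloud_id] = res["address"]
    for child in module.get("child_modules", []):
        mapping.update(cloud_id_to_address(child))
    return mapping

doc = json.loads(SHOW_JSON)
print(cloud_id_to_address(doc["values"]["root_module"]))
```

The resulting map is the join key between provisioned data (cloud IDs) and IaC (resource addresses and modules).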

3. Application code and config

Application manifests, container images, Kubernetes deployments, Helm charts. These define what runs ON the infrastructure: services, pods, containers.

Ex: Git commit 4e1f2a deployed container payment-api:v2.3.1 via Helm chart, which created pod payment-api-7d4f9.
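For application deployments, a pod object as returned by `kubectl get pod <name> -o json` already carries most of the links. The sketch assumes a trimmed pod object; the `example.com/git-commit` annotation and the ReplicaSet name are hypothetical (Kubernetes does not record the source commit unless your CI/CD pipeline adds it):

```python
# Trimmed pod object; only the fields used below are shown.
POD = {
    "metadata": {
        "name": "payment-api-7d4f9",
        # Hypothetical annotation set by CI/CD, not a Kubernetes built-in.
        "annotations": {"example.com/git-commit": "4e1f2a"},
        "ownerReferences": [{"kind": "ReplicaSet", "name": "payment-api-6c8b5"}],
    },
    "spec": {
        "nodeName": "ip-10-0-3-42",
        "containers": [{"name": "payment-api", "image": "payment-api:v2.3.1"}],
    },
}

def edges_from_pod(pod):
    """Derive (src, rel, dst) edges linking a pod to its node, images, owner, and commit."""
    meta, spec = pod["metadata"], pod["spec"]
    pod_id = f"pod/{meta['name']}"
    edges = []
    if spec.get("nodeName"):  # unset until the scheduler places the pod
        edges.append((pod_id, "SCHEDULED_ON", f"node/{spec['nodeName']}"))
    for container in spec.get("containers", []):
        edges.append((pod_id, "RUNS_IMAGE", f"image/{container['image']}"))
    for owner in meta.get("ownerReferences", []):
        edges.append((pod_id, "OWNED_BY", f"{owner['kind'].lower()}/{owner['name']}"))
    commit = meta.get("annotations", {}).get("example.com/git-commit")
    if commit:
        edges.append((pod_id, "DEPLOYED_FROM", f"commit/{commit}"))
    return edges

for edge in edges_from_pod(POD):
    print(edge)
```

Following `OWNED_BY` from the ReplicaSet to its Deployment (and from there to Helm release labels) would extend the chain the same way.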

4. Just-in-time telemetry

Live signals from Datadog, CloudWatch, Kubernetes object states, deployment streams. Attach each signal to the relevant components so changes clearly show their impact. Think of the graph as the skeleton and telemetry as the flesh: you only need durable links that tell you what the signal touches and when.

Ex: Datadog error spike linked to frontend-api after deploy deploy-2025-10-15T22:01Z.
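A minimal sketch of attaching a signal to a change: given an error spike, look for deploys to the affected service (or, using the graph, to its dependencies) shortly before the spike. The 30-minute default window and the event records are assumptions for illustration:

```python
from datetime import datetime, timedelta, timezone

# Hypothetical change events, already keyed to service nodes in the graph.
DEPLOYS = [
    {"id": "deploy-2025-10-15T22:01Z", "service": "frontend-api",
     "at": datetime(2025, 10, 15, 22, 1, tzinfo=timezone.utc)},
    {"id": "deploy-2025-10-15T18:30Z", "service": "search-api",
     "at": datetime(2025, 10, 15, 18, 30, tzinfo=timezone.utc)},
]

def suspect_deploys(services, spike_at, deploys, window=timedelta(minutes=30)):
    """Deploys to any of `services` within `window` before the spike, newest first.

    Pass the spiking service plus its graph dependencies to catch upstream changes.
    """
    hits = [
        d for d in deploys
        if d["service"] in services and spike_at - window <= d["at"] <= spike_at
    ]
    return sorted(hits, key=lambda d: d["at"], reverse=True)

spike = datetime(2025, 10, 15, 22, 9, tzinfo=timezone.utc)
print([d["id"] for d in suspect_deploys({"frontend-api"}, spike, DEPLOYS)])
# ['deploy-2025-10-15T22:01Z']
```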

5. Operational and business metadata

Ticket IDs linked to services, SLA definitions attached to components, contract renewal dates tied to customers. If a fact has a system of record and a stable identifier, model it in the graph. If its value is explanation or judgment (runbooks, postmortems), keep it as a document and attach it with a typed link.

Ex: Jira ticket DEVOPS-456 linked to service/payment-api with SLA 99.9% and customer AcmeCorp.
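Once affected services are known, business metadata turns them into customer impact. A sketch with hypothetical metadata (the `Globex` customer, the `service/search-api` entry, and its SLA are invented for illustration); services without metadata are reported rather than silently dropped:

```python
# Hypothetical business metadata keyed by service node ID.
SERVICE_META = {
    "service/payment-api": {"sla": "99.9%", "customers": ["AcmeCorp"]},
    "service/search-api": {"sla": "99.5%", "customers": ["AcmeCorp", "Globex"]},
}

def customer_impact(affected_services, service_meta):
    """Group affected services by customer; return services lacking metadata separately."""
    impact, unmapped = {}, []
    for svc in sorted(affected_services):
        meta = service_meta.get(svc)
        if meta is None:
            unmapped.append(svc)  # surface the gap instead of hiding it
            continue
        for customer in meta["customers"]:
            impact.setdefault(customer, []).append((svc, meta["sla"]))
    return impact, unmapped

impact, unmapped = customer_impact(
    {"service/payment-api", "service/search-api", "service/ledger"}, SERVICE_META
)
print(impact)
print("no metadata:", unmapped)
```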

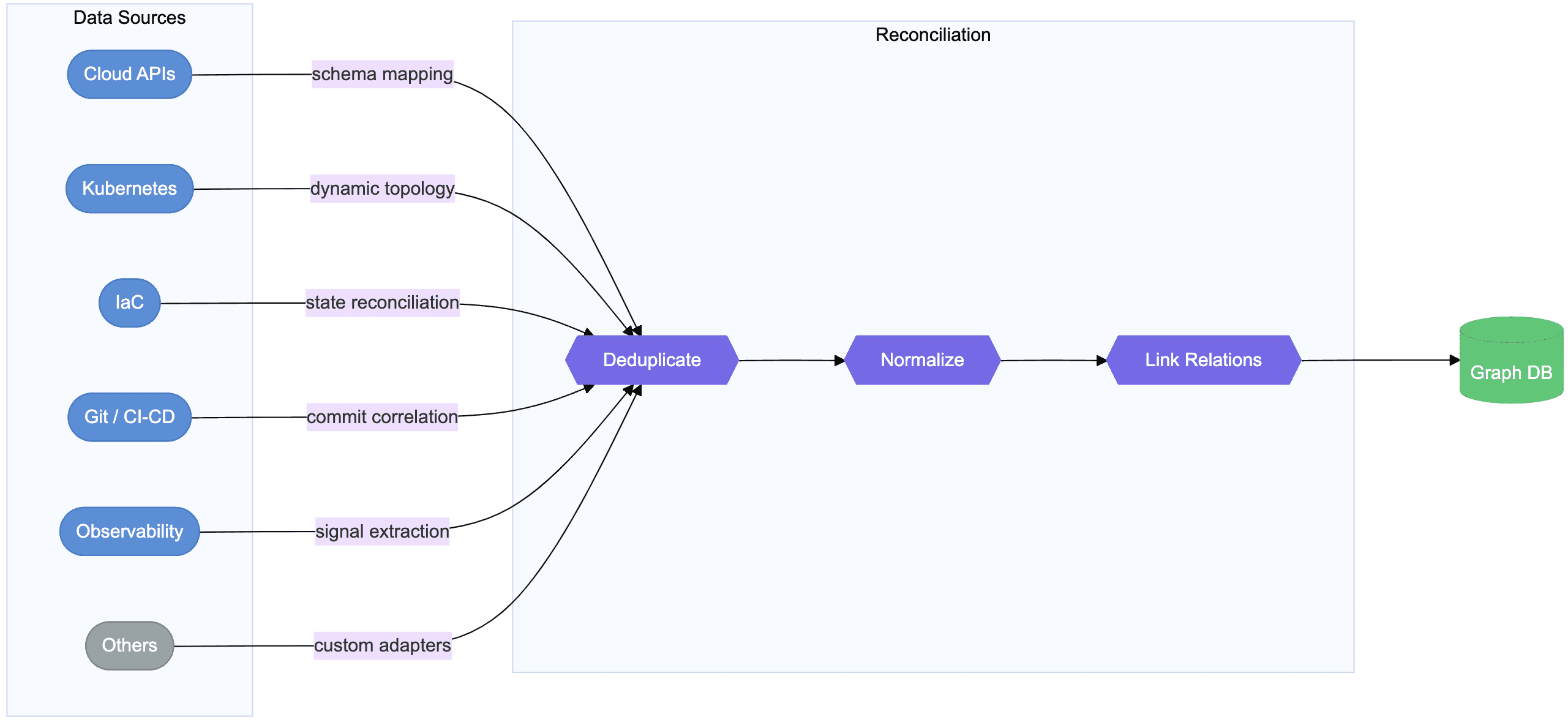

How infrastructure data gets reconciled into a dependency graph

Why it’s hard (and where it can fail)

The graph sounds elegant on paper, but building it in production is hard. A topology graph that is stale or partial can mislead incident response just as easily as missing data.

1. Graph freshness is not guaranteed

Infrastructure changes constantly. Cloud APIs are rate-limited, eventually consistent, and scattered across accounts and regions. Without explicit freshness guarantees, a graph may describe what used to exist rather than what is failing now, causing wrong blast radius estimates or missed dependencies during incidents.
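One way to make freshness explicit is to record when each node was last confirmed by discovery and compare that against a per-kind budget. A sketch; the budgets below are hypothetical and would depend on how quickly each resource type changes:

```python
from datetime import datetime, timedelta, timezone

# Hypothetical freshness budgets: fast-changing objects get tighter bounds.
MAX_AGE = {
    "Pod": timedelta(minutes=5),
    "EC2Instance": timedelta(hours=1),
    "IAMRole": timedelta(hours=6),
}

def stale_nodes(last_seen, now, max_age=MAX_AGE, default=timedelta(hours=1)):
    """IDs of nodes whose last successful discovery is older than their kind's budget."""
    return sorted(
        node_id
        for node_id, (kind, seen_at) in last_seen.items()
        if now - seen_at > max_age.get(kind, default)
    )

now = datetime(2026, 1, 27, 3, 0, tzinfo=timezone.utc)
last_seen = {
    "pod/payment-api-7d4f9": ("Pod", now - timedelta(minutes=12)),
    "ec2/i-02abc123": ("EC2Instance", now - timedelta(minutes=20)),
    "iam_role/prod-rds-access": ("IAMRole", now - timedelta(hours=7)),
}
print(stale_nodes(last_seen, now))
# ['iam_role/prod-rds-access', 'pod/payment-api-7d4f9']
```

An incident answer that touches a stale node can then carry an explicit "data as of" caveat instead of presenting old topology as current.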

2. Topology is always incomplete

Some dependencies are implicit, dynamic, or hidden in application logic instead of infrastructure APIs. Serverless flows, async queues, feature flags, and conditional routing often escape static discovery. Even with strong discovery pipelines, the model can only reflect “what we currently know depends on this,” not an absolute truth.
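Observed traffic can expose some of the missing edges. A minimal sketch, assuming caller/callee service pairs have already been aggregated from trace spans or flow logs (the service names are hypothetical): diff them against the modeled `CALLS` edges. It only finds dependencies that were exercised during the observation window, so rarely taken or conditional paths can still be missing:

```python
def unmodeled_dependencies(observed_calls, modeled_edges):
    """Caller/callee pairs seen in traffic that the graph has no CALLS edge for."""
    modeled = {(src, dst) for src, rel, dst in modeled_edges if rel == "CALLS"}
    return sorted(set(observed_calls) - modeled)

MODELED = [
    ("checkout", "CALLS", "payment-api"),
    ("payment-api", "CALLS", "fraud-check"),
]
# Hypothetical pairs aggregated from span parent/child service names over one hour.
OBSERVED = [
    ("checkout", "payment-api"),
    ("payment-api", "feature-flag-service"),
    ("checkout", "payment-api"),
]
print(unmodeled_dependencies(OBSERVED, MODELED))
# [('payment-api', 'feature-flag-service')]
```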

3. Partial graphs can create false confidence

A tidy graph can look authoritative even when key edges are missing. That’s risky. During incidents, teams may trust an incomplete blast radius, delay escalation, or misjudge customer impact because the graph appears complete when it isn’t.
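One mitigation is to make incompleteness part of the answer. The sketch below assumes you track which nodes a discovery source has actually enumerated inbound dependencies for (a hypothetical `discovered` set). It returns the blast radius together with the nodes whose dependents are unknown, so a responder or agent sees "incomplete" instead of a confident, possibly short list:

```python
from collections import deque

def blast_radius_with_coverage(changed, dependents, discovered):
    """Reverse-dependency BFS that also reports nodes whose dependents were never discovered.

    dependents: node -> list of nodes that depend on it
    discovered: nodes whose inbound dependencies some discovery source has enumerated
    """
    affected, unverified = set(), set()
    seen, queue = {changed}, deque([changed])
    while queue:
        node = queue.popleft()
        if node not in discovered:
            unverified.add(node)  # an empty neighbor list here means "unknown", not "none"
        for parent in dependents.get(node, []):
            if parent not in seen:
                seen.add(parent)
                affected.add(parent)
                queue.append(parent)
    return {"affected": affected, "unverified": unverified, "complete": not unverified}

DEPENDENTS = {"db/orders": ["svc/orders"], "svc/orders": ["svc/checkout"]}
print(blast_radius_with_coverage("db/orders", DEPENDENTS, {"db/orders", "svc/orders"}))
```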

4. Querying the graph safely is non-trivial

Even with solid data, incorrect queries (especially by AI agents) can skip dependencies or over-expand context. Without scoped traversal and guardrails, the graph can either miss impact or flood responders with noise.
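A sketch of those guardrails, with hypothetical relationship names (`ROUTES_TO`, `READS`) alongside the `SCHEDULED_ON` and `CALLS` examples above: restrict traversal to an allowlist of dependency types, cap the depth and the number of returned nodes, and return an explicit `truncated` flag whenever a limit may have hidden further impact:

```python
from collections import deque

def scoped_blast_radius(changed, edges, allowed_rels, max_depth=3, max_nodes=50):
    """Reverse traversal limited by relationship type, depth, and result size.

    edges: iterable of (dependent, rel, dependency) triples
    Returns (affected: dict node -> depth, truncated: bool)
    """
    dependents = {}
    for dependent, rel, dependency in edges:
        if rel in allowed_rels:  # ignore non-dependency links such as READS
            dependents.setdefault(dependency, []).append(dependent)
    affected, truncated = {}, False
    queue = deque([(changed, 0)])
    while queue:
        node, depth = queue.popleft()
        if depth == max_depth:
            if dependents.get(node):
                truncated = True  # possibly more impact beyond the depth limit
            continue
        for parent in dependents.get(node, []):
            if parent in affected or parent == changed:
                continue
            if len(affected) >= max_nodes:
                return affected, True  # size cap reached: say so explicitly
            affected[parent] = depth + 1
            queue.append((parent, depth + 1))
    return affected, truncated

EDGES = [
    ("pod/a", "SCHEDULED_ON", "node/1"),
    ("svc/a", "ROUTES_TO", "pod/a"),
    ("svc/b", "CALLS", "svc/a"),
    ("svc/c", "CALLS", "svc/b"),
    ("dashboard/x", "READS", "svc/a"),
]
ALLOWED = {"SCHEDULED_ON", "ROUTES_TO", "CALLS"}
print(scoped_blast_radius("node/1", EDGES, ALLOWED, max_depth=2))
# ({'pod/a': 1, 'svc/a': 2}, True)
```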

Do you actually need a context graph?

Skip the graph if:

You have fewer than 20-30 services

Your infrastructure is single-cloud, single-region

Your team can mentally map the critical paths (< 5 people, low turnover)

Incidents are rare and low-stakes (no SLA commitments, small user base)

In that case, connecting your AI assistant to existing tools through a few MCP (Model Context Protocol) servers is more than enough.

Consider it if:

Dependencies span multiple clouds, clusters, or teams

No single person understands the full system

Mean time to recovery is measured in hours because you're searching for the problem

Compliance or SLA penalties make incidents expensive

Prove impact:

If you invest in a context graph, keep the scope driven by operational needs. The goal is simple: during an incident, can your team or an AI agent identify impact and ownership in under two minutes?

Teams typically either extend existing tools, build a graph in-house, or use an external platform. The right choice depends on internal expertise, tolerance for maintenance, and whether faster incident understanding materially changes outcomes for your business.

What you can do tomorrow (even without a graph):

Add owner tags to every service.

Surface recent changes. Make deployments, config updates, and infra changes from the last 24 hours easy to inspect.

For key services, maintain a short list of hard dependencies that define blast radius.

Link services to impact. Know which services map to which customers and SLAs.
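The first two steps need no graph at all. A sketch, assuming a hypothetical service inventory and change feed (in practice pulled from a service catalog, CI/CD, and cloud audit logs), that lists services without an owner tag and changes from the last 24 hours:

```python
from datetime import datetime, timedelta, timezone

# Hypothetical inventory and change feed.
SERVICES = {
    "payment-api": {"owner": "team-payments"},
    "search-api": {"owner": None},
    "reporting": {},
}
CHANGES = [
    {"service": "payment-api", "kind": "deploy", "at": datetime(2026, 1, 27, 1, 15, tzinfo=timezone.utc)},
    {"service": "search-api", "kind": "config", "at": datetime(2026, 1, 25, 9, 0, tzinfo=timezone.utc)},
]

def missing_owners(services):
    """Services with no owner tag: nobody obvious to page when they break."""
    return sorted(name for name, tags in services.items() if not tags.get("owner"))

def recent_changes(changes, now, window=timedelta(hours=24)):
    """Changes within the last `window`, newest first."""
    recent = (c for c in changes if now - c["at"] <= window)
    return sorted(recent, key=lambda c: c["at"], reverse=True)

now = datetime(2026, 1, 27, 3, 0, tzinfo=timezone.utc)
print(missing_owners(SERVICES))
print([(c["service"], c["kind"]) for c in recent_changes(CHANGES, now)])
# ['reporting', 'search-api']
# [('payment-api', 'deploy')]
```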